Explore music concepts

and texts from the Polifonia Project.

The corpus in numbers: All

documents

624.117

sentences

24.173.326

tokens

450.861.842

types

179.224.624

links

128.905.937

entities

0

Learn more About

the corpus of the Project.

The Polifonia Textual Corpus is a plurilingual diachronic corpus focused on Musical Heritage (MH) covering Italian, English, French, Spanish and Dutch. Natural LanguageProcessing (NLP) techniques were used to process the corpus and produce automatic morphosyntactic, semanticand MH-specific annotations. Custom APIs have been developed and released to enable domain experts, scholars and music professionals to leverage the annotations produced to perform advanced structured queries on the corpus.The available interrogation capabilities overcome the basic keyword-based search, offering the possibility of querying the corpus by taking advantage of the advanced semantic and MH-specific information encoded in the annotation.

Data

The Polifonia Textual Corpus data, metadata and statistics, along with its annotations and interrogation tools are part of the Polifonia Ecosystem and oblige the Ecosystem’s rulebook. They are released through the dedicated Polifonia Corpus GitHub repository and interactive website. The corpus is released under CC BY license as a set of metadata that allows the reproduction of the whole corpus. We remark that the texts included in the corpus are not published in their integral form because they are subject to heterogeneous licensing. As Deliverable 4.1 details, the initial intention of producing a textual corpus on MH of around 1 million words for five languages (English, French, German, Italian and Spanish) underwent a substantial adjustment during the unfolding of the project. The large modularized corpus developed overcame the original expectations per dimension (it eventually contains more than 100 million words for each language) and per language coverage (it also includes Dutch). A significant part of the sources of the corpus was only available as images or pdf files. We leveraged Optical Character Recognition (OCR), state-of-the-art technology that addresses this problem, to convert them in a processable format. Together with the corpus, WP4 also developed a specialized lexicon, extensively described in D4.1.

The Polifonia Lexicon is a linguistic resource representing the MH-specific terminology and concepts in six languages, organized in the style of WordNet, as sense-equivalent classes called synsets, each associated with its lexicalizations.

Modules

The Polifonia Textual Corpus is at the same time representative of discourse on Musical Heritage in different languages through an historical perspectives, and also specific to single case studies represented by the Pilots. Heterogeneous requirements lead the corpus to be organized into four modules: The Encyclopedic Module, The BookModule, The Periodical Module and The Pilots Module. Each module has its features and development methodologies. Each module (except the Pilot module) contains documents in six languages: Dutch (NL), English (EN), French (FR), German (DE),Italian (IT) and Spanish (ES).

Learn more:

It was created selecting from BabelNet domains all the Wikipedia musical pages.

The metadata of the module can be downloaded from:

| lang | url |

|---|---|

| DE | |

| EN | |

| ES | |

| FR | |

| IT | |

| NL |

The data of the module can be downloaded from:

| lang | url |

|---|---|

| DE | |

| EN | |

| ES | |

| FR | |

| IT | |

| NL |

Some statistics of the module are provided below:

| lang | #documents | #sentences | #tokens | #types | #links | entities |

|---|---|---|---|---|---|---|

| DE | 53.986 | 1.459.265 | 44.523.547 | 9.732.779 | 12.561.177 | 2.197.438 |

| EN | 250.413 | 7.362.272 | 198.257.649 | 1.191.901 | 54.059.979 | 25.786.043 |

| ES | 57.891 | 1.247.583 | 36.229.557 | 537.465 | 7.171.759 | 2.996.185 |

| FR | 65.970 | 2.901.295 | 82.979.944 | 653.489 | 19.208.818 | 6.212.997 |

| IT | 77.986 | 1.548.981 | 47.497.487 | 491.500 | 14.519.636 | 2.649.949 |

| NL | 36.609 | 1.246.881 | 23.539.528 | 479.962 | 4.716.170 | 2.453.332 |

It was created using the Polifonia Textual Corpus Population module that allows to access different digital libraries (such as BNF and BNE) and to select from them documents related to music. The PTCPM allows also to perform optical character recognition (OCR) on images and PDF files.

The metadata of the module can be downloaded from:

| lang | url |

|---|---|

| DE | |

| EN | |

| ES | |

| FR | |

| IT | |

| NL |

The data of the module cannot be downloaded due to copyright issue. However, it is possible to reconstruct the corpus using the metadata provided in the previous section. Furthermore, the data processed and annotated can be accessed interrogating the corpus (how to interrogate the corpus is explained in a README.md file inside the interrogation folder of this repository).

Some statistics of the module are provided below:

| lang | #documents | #sentences | #types | #tokens |

|---|---|---|---|---|

| DE | 237 | 38.633 | 121.530 | 489.225 |

| EN | 360 | 49.595 | 185.280 | 940.232 |

| ES | 41.093 | 731.606 | 1.852.430 | 20.180.197 |

| FR | 265 | 633.173 | 1.305.283 | 14.354.611 |

| IT | 12200 | 202.730 | 405.099 | 2.571.090 |

| NL | 83 | 116.593 | 539.102 | 1.779.824 |

It was created with the help of musicologists that provided the titles of different influencial music periodicals.

The metadata of the module can be downloaded from:

| lang | url |

|---|---|

| DE | |

| EN | |

| ES | |

| FR | |

| IT | |

| NL |

The data of the module cannot be downloaded due to copyright issue. However, it is possible to reconstruct the corpus using the metadata provided in the previous section. Furthermore, the data processed and annotated can be accessed interrogating the corpus (how to interrogate the corpus is explained in a README.md file inside the interrogation folder of this repository).

Some statistics of the module are provided below:

| lang | #documents | #sentences | #types | #tokens |

|---|---|---|---|---|

| DE | 705 | 121.113 | 544.376 | 2.405.289 |

| EN | 2.868 | 4.400.015 | 7.342.527 | 76.180.398 |

| ES | 455 | 87.025 | 677.041 | 3.170.480 |

| FR | 349 | 329.166 | 696.427 | 5.111.915 |

| IT | 1.251 | 433.465 | 992.902 | 7.879.459 |

| NL | 125 | 187.350 | 716.506 | 3.880.499 |

It was created collecting the textual material selected by five Polifonia Pilots:

The metadata of the module can be downloaded from:

| Pilot | url |

|---|---|

| BELLS | |

| CHILD | |

| MEETUPS | |

| MUSICBO | |

| ORGANS |

The data of the Pilots Module of the Polifonia textual Corpus collected for Bells, MusicBo and Organs pilots cannot be published in their integral form because they are subject to heterogeneous license restrictions. The respective set of published metadata (see table above) allows for the reproduction of the whole corpora. Texts collected for Child and Meetups Pilots are royalty-free, therefore we report links to retrieve them from their corresponding GitHub repositories:

| Pilot | url |

|---|---|

| CHILD | https://github.com/polifonia-project/documentary-evidence-benchmark/tree/main/corpus |

| MEETUPS | https://github.com/polifonia-project/meetups_pilot/tree/main/cleanText |

However, it is possible to reconstruct the corpus using the metadata provided in the previous section. Furthermore, the data processed and annotated can be accessed interrogating the corpus (how to interrogate the corpus is explained in a README.md file inside the interrogation folder of this repository).

Some statistics of the module are provided below:

| pilot | #documents | #sentences | #types | #tokens |

|---|---|---|---|---|

| BELLS | 59 | 18.481 | 128.061 | 434.439 |

| CHILD | 30 | 157.815 | 361.550 | 3.442.840 |

| MEETUPS | 19.476 | 822.861 | 1.631.371 | 21.536.135 |

| MUSICBO | 46 | 51.781 | 289.247 | 1.412.456 |

| ORGANS | 1.660 | 25.647 | 45.298 | 368.439 |

Annotations

The Polifonia Textual Corpus was processed using top-notch Natural Language Processing technologies in order to automatically extrapolate the implicit morphosyntactic, semantic and domain-specific information contained in it. This process, called corpus annotation, consists in encoding such information in a structured form to enable analysts (linguists, musicologists, scholars and the general public) to interrogate the corpus by performing sophisticated queries. In case of sources available as images or pdfs file, the NLP technologies employed to produce the annotations were applied to the version of the documents converted into a processable format by the custom piece of OCR software1 released within Deliverable 4.1.

The annotations of the corpus was produced using cutting-edge Natural Language Processing technologies. We annotated each text of the corpus with a NLP pipeline composed of:

Steps 1-4 and 6 have been conducted using the SpaCy NLP library. For each language of the corpus we used a dedicated SpaCy Model:

| lang | model name |

|---|---|

| DE | de_core_news_lg |

| EN | en_core_web_trf |

| ES | es_core_news_lg |

| FR | fr_core_news_lg |

| IT | it_core_news_lg |

| NL | nl_core_news_lg |

Steps 5 and 7 require more sophisticated technologies. For this reason, we used EWISER for step 5 (Word Sense Disambiguation) for English. The other languages of the corpus have been annotated using a new system developed within the project. It exploits recent advantages on lexical semantics and in particular on the representation of word senses (ARES) and a powerful WSD model (WSD-games). The advantages of this new model are based on the fact that it is accurate, fast and can work efficiantly on different languages. For step 7 we used ExTenD for English. For the other language of the corpus we adapted WSD-games to work on the entity linking task. Also in this case the model is accurate, fast and can work efficiantly on different languages.

The annotations of the Polifonia Textual Corpus are provided in CoNLL-U format. Given an input sentence (from the English Wikipedia module) such as:

James H. Mathis Jr. (born August 1967), known as Jimbo Mathus, is an American singer-songwriter and guitarist, best known for his work with the swing revival band Squirrel Nut Zippers.

the resulting annotation will start with metadata information:

#polifonia_doc_id = 32607842_bn___02615097n.html

Tha provides an unique identifier for the document and in this case is composed of two identifiers: the first one is the BabelNet id of the corresponding Wikipedia page (32607842_bn), the second part is the Wikipedia identifier of the page (02615097n).

#polifonia_sent_id = sent_0

Then there is a progressive number for each sentence the document.

#sent = James H. Mathis Jr. (born August 1967), known as Jimbo Mathus, is an American singer-songwriter and guitarist, best known for his work with the swing revival band Squirrel Nut Zippers.

And then there is the text of the sentence.

Afther the metadata there is the sentence annotation:

| token_id | word form | lemma | POS | WordNet sense | NER class | NER BIO tag | Entity Linking ------- | is a musical concept? |

|---|---|---|---|---|---|---|---|---|

| token_0 | James | James | PROPN | PERSON | B | James H. Mathis Jr. | 0 | |

| token_1 | H. | H. | PROPN | PERSON | I | 0 | 0 | |

| token_2 | Mathis | Mathis | PROPN | PERSON | I | 0 | 0 | |

| token_3 | Jr. | Jr. | PROPN | PERSON | I | 0 | 0 | |

| token_4 | ( | ( | PUNCT | O | 0 | 0 | ||

| token_5 | born | bear | VERB | wn:02518161v | O | 0 | 0 | |

| token_6 | August | August | PROPN | DATE | B | August 1967 | 0 | |

| token_7 | 1967 | 1967 | NUM | DATE | I | 0 | ||

| token_8 | ) | ) | PUNCT | O | 0 | 0 | ||

| token_9 | , | , | PUNCT | O | 0 | 0 | ||

| token_10 | known | know | VERB | wn:01426397v | O | 0 | 0 | |

| token_11 | as | as | ADP | O | 0 | 0 | ||

| token_12 | Jimbo | Jimbo | PROPN | PERSON | B | Jimbo Mathus | 0 | |

| token_13 | Mathus | Mathus | PROPN | PERSON | I | 0 | 0 | |

| token_14 | , | , | PUNCT | O | 0 | 0 | ||

| token_15 | is | be | AUX | O | 0 | 0 | ||

| token_16 | an | an | DET | O | 0 | 0 | ||

| token_17 | American | american | ADJ | wn:02927512a | NORP | B | United States | 0 |

| token_18 | singer | singer | NOUN | wn:10599806n | O | 0 | 1 | |

| token_19 | - | - | PUNCT | O | 0 | 0 | ||

| token_20 | songwriter | songwriter | NOUN | wn:10624540n | O | 0 | 1 | |

| token_21 | and | and | CCONJ | O | 0 | 0 | ||

| token_22 | guitarist | guitarist | NOUN | wn:10151760n | O | 0 | 1 | |

| token_23 | , | , | PUNCT | O | 0 | 0 | ||

| token_24 | best | well | ADV | wn:00011093r | O | 0 | 0 | |

| token_25 | known | know | VERB | wn:00596644v | O | 0 | 0 | |

| token_26 | for | for | ADP | O | 0 | 0 | ||

| token_27 | his | his | PRON | O | 0 | 0 | ||

| token_28 | work | work | NOUN | wn:05755883n | O | 0 | 0 | |

| token_29 | with | with | ADP | O | 0 | 0 | ||

| token_30 | the | the | DET | O | 0 | 0 | ||

| token_31 | swing | swing | NOUN | wn:07066042n | O | 0 | 1 | |

| token_32 | revival | revival | NOUN | wn:01047338n | O | 0 | 0 | |

| token_33 | band | band | NOUN | wn:08240169n | O | 0 | 1 | |

| token_34 | Squirrel | Squirrel | PROPN | ORG | B | Squirrel Nut Zippers | 0 | |

| token_35 | Nut | Nut | PROPN | ORG | I | 0 | 0 | |

| token_36 | Zippers | Zippers | PROPN | ORG | I | 0 | 0 | |

| token_37 | . | . | PUNCT | O | 0 | 0 |

Issues

Have you found any issues or bugs while using the Polifonia Corpus application?

Please, use the following Github link and let us know. It will allow you to submit a new issue to the Polifonia Corpus repository. Github registration is required.

Your contribution is greatly appreciated.

REPORT ISSUE REPORT VIA EMAILLet us Guide you through

the interrogation of the corpus.

The interactive dashboard of this application website has been created to allow easy access to the Polifonia Corpus, with the employment of a user-friendly design that reminds of a music player. Different parameters have been implemented to select, navigate and store sentences of the corpus that satisfy a specific query. A default set of parameters has been established to allow a first round of interrogations. However, it is possible to rearrange parameters according to the user needs. In particular, four different types of interrogations (2) have been implemented, to determine peculiar linguistic investigations of the corpus.

Dashboard

The parameters are explained below and their use is described in the following sections:

1. Query input

In this input form you can digit the word to be used for the interrogation, e.g., guitar. The input comes also with autocompletion, populated by words coming from the Polifonia Lexicon.The user can either select one the suggested words or write their own. Pressing the return key after a word runs the interrogation.

2. Type select

It allows for selection of interrogation type. It indicates the type of interrogation that has to be

conducted over the inputted query word.

The keyword search can be used to select the sentences in the corpus that contain that specific

keyword. The matching between the keyword and the results is literal.

The lemma search can be used to select the sentences in the corpus that contain variations of the

input canonical form or lemma.

The concept search is similar to the keyword search but instead of searching the corpus using

keywords it uses concepts (the specific sense of a word), exploiting the sense annotation

of the corpus. Before seeing results, the user has to choose one of the available word senses

of the inputted word.

The entity search is similar to the concept search but instead of searching the corpus using

word senses it uses named entities (as specified in a knowledge base, Wikipedia in our case),

exploiting the sense annotation of the corpus.

3. Module select

It allows for selection of corpus module. It ndicates what module of the corpus has to be queried.

It can be Wikipedia, Books, Periodicals or Pilots. Pilots include: MusicBo, Child, Meetups, Bells

and Organs. The query will be then conducted on the set of texts inside the module.

Each time a module is selected, general statistics in the upper section the web page are updated,

displaying the total number of entries for the specific module.

4. Language select

It allows for selection of module’s language. Each module, except the Pilots, contains documents

in six languages: Dutch (NL), English (EN), French (FR), German (DE),Italian (IT) and Spanish (ES).

5. Number of lines

It indicates the number of sentences to get at each interrogation.

Each line is represented by a sentence.

6. Control panel

The control panel is made of three buttons. The first one (Reset) allows for resetting of the

interrogation parameters, replacing the values with default ones. The second button (Run)

allows for launching the interrogation with the selected parameters. The last button (View)

shows the number of selected sentences, on the right, and allows for restricted view of sentences.

When active, the view is restricted to the selected sentences

7. Download option

This button allows for downloading the results of the interrogation. The user can download a

selection of senteces or the totality of sentences included in the results. Once selected the number

of sentences to be downloaded, it allows for specifing the output file format: sentences can be stored

in textual files, which will just include the full text of the sentences; csv files, which include sentences

and related metadata; csv files, that reproduce the KWIC structure of the web interface.

Results

Results are visualized in an interactive container and are organized according to the parameters explained below.

Their use is described in the following map and legend.

• Visual elements.

• Clickable elements.

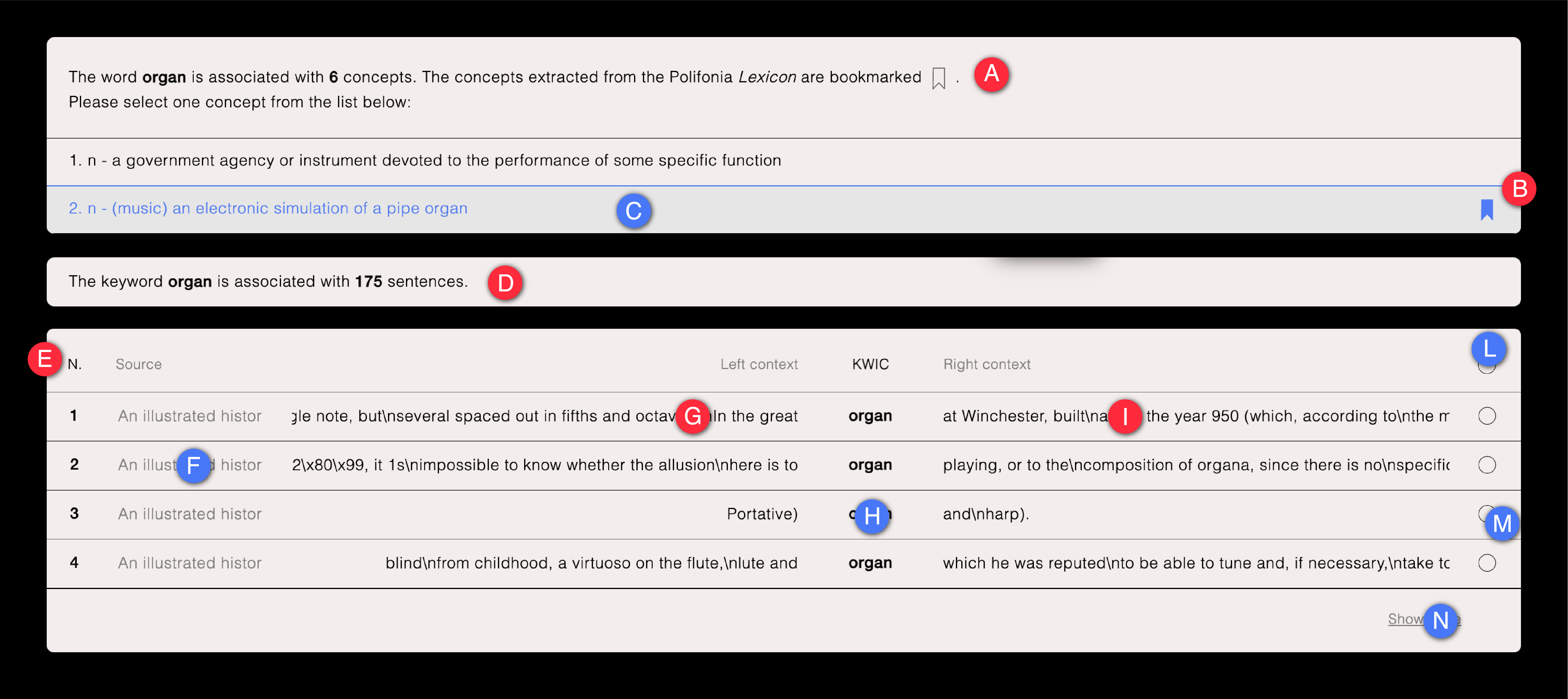

A Selection panel: it shows the total number of association between the query word and the entities or concepts

B Lexicon bookmark: it indicates the synsets extracted from the Polifonia Lexicon

C Selected option: allows for selection of specific named entities or concept

D Results panel: it shows the total number of results associated with the query

E Row number: it shows the identification number of result, according to the row number

F Sentence's source: if clicked it allows for a better displaying of the source info and bibliographic record

G Left context: text content proceeding the concordance line keyword

H Concordance keyword: Key Word In Context (KWIC)

I Right context: text content following the concordance line keyword

L Select all checkbox: if clicked it allows for selection of all lines

M Select sentence checbox: if clicked it allows for selection of single line

N Show more button: if clicked it allows for displaying of 20 + results

Please insert a word in the Query input box

Click on the Run button to start the query and interrogate the Polifonia Corpus

You can report erros by clicking on the Report Error button on the bottom left of this tool.

Additionally, generic issues can be reported via github or email in the about section.